papers

For a full list of publications with citations, please see my Google Scholar profile.

(* denotes combined first authorship)

2025


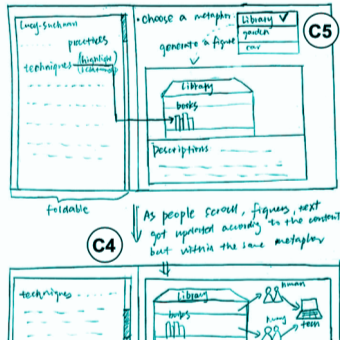

Towards Dialogic and On-Demand Metaphors for Interdisciplinary Reading. Matin Yarmand, Courtney N. Reed, Udayan Tandon, and 3 more authors. In Proceedings of the 2025 CHI Conference on Human Factors in Computing Systems, Apr 2025

The interdisciplinary field of Human-Computer Interaction (HCI) thrives on productive engagement with different domains, yet this engagement often breaks down due to idiosyncratic writing styles and unfamiliar concepts. Inspired by the dialogic model of abstract metaphors, as well as the potential of Large Language Models (LLMs) to produce on-demand support, we investigate the use of metaphors to facilitate engagement between Science and Technology Studies (STS) and System HCI. Our reflective-style survey with early-career HCI researchers (N=48) showed that limited prior exposure to STS research can hinder the perceived openness of the work and, ultimately, interest in reading it. The survey also revealed that metaphors increase the likelihood of continuing to read STS papers, and that alternative perspectives can build critical thinking skills that mitigate potential risks of LLM-generated metaphors. Finally, we offer a specified model of metaphor exchange (within this generative context) that incorporates alternative perspectives to construct shared understanding in interdisciplinary engagement.

@inproceedings{Yarmand_CHI25_DialogicMetaphor, title = {{Towards Dialogic and On-Demand Metaphors for Interdisciplinary Reading}}, author = {Yarmand, Matin and Reed, Courtney N. and Tandon, Udayan and Hekler, Eric B. and Weibel, Nadir and Wang, April Yi}, year = {2025}, month = apr, booktitle = {{Proceedings of the 2025 CHI Conference on Human Factors in Computing Systems}}, location = {Yokohama, Japan}, publisher = {Association for Computing Machinery}, address = {New York, NY, USA}, series = {CHI '25}, articleno = {1}, numpages = {19}, doi = {10.1145/3706598.3713698}, isbn = {9798400713941}, url = {https://doi.org/10.1145/3706598.3713698}, }

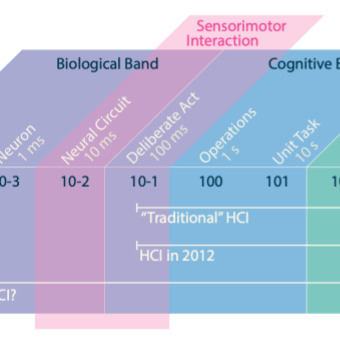

Sensorimotor Devices: Coupling Sensing and Actuation to Augment Bodily Experience. Paul Strohmeier, Laia Turmo Vidal, Gabriela Vega, and 5 more authors. In Extended Abstracts of the CHI Conference on Human Factors in Computing Systems, Apr 2025

An emerging space in interface research is wearable devices that closely couple their sensing and actuation abilities. A well-known example is MetaLimbs [39], where sensed movements of the foot are directly mapped to the actuation of supernumerary robotic limbs. These systems differ from wearables focused on sensing, such as fitness trackers, or wearables focused on actuation, such as VR headsets. They are characterized by tight coupling between the user’s action and the resulting digital feedback from the device, in time, space, and mode. The properties of this coupling are critical for the user’s experience, including the user’s sense of agency, body ownership, and experience of the surrounding world. Understanding such systems is an open challenge, which requires knowledge not only of computer science and HCI, but also of psychology, physiology, design, engineering, cognitive neuroscience, and control theory. This workshop aims to foster discussion between these diverse disciplines and to identify links and synergies in their work, ultimately developing a common understanding of future research directions for systems that intrinsically couple sensing and action.

@inproceedings{Strohmeier_CHI25_SensorimotorDevices, title = {{Sensorimotor Devices: Coupling Sensing and Actuation to Augment Bodily Experience}}, author = {Strohmeier, Paul and Turmo Vidal, Laia and Vega, Gabriela and Reed, Courtney N. and Mazursky, Alex and AliAbbasi, Easa and Tajadura-Jiménez, Ana and Steimle, Jürgen}, year = {2025}, month = apr, booktitle = {{Extended Abstracts of the CHI Conference on Human Factors in Computing Systems}}, location = {Yokohama, Japan}, publisher = {Association for Computing Machinery}, address = {New York, NY, USA}, series = {CHI EA '25}, doi = {10.1145/3706599.3706735}, url = {https://doi.org/10.1145/3706599.3706735}, }

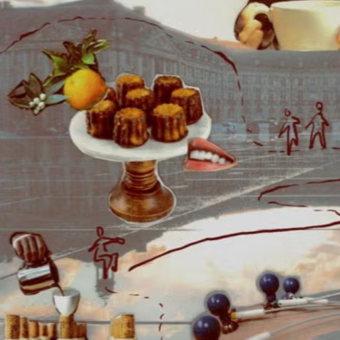

Sensory Data Dialogues: A Somaesthetic Exploration of Bordeaux through Five Senses. Fiona Bell, Karen Anne Cochrane, Alice C Haynes, and 5 more authors. In Proceedings of the Nineteenth International Conference on Tangible, Embedded, and Embodied Interaction, Feb 2025

The design of interactive systems and digital artefacts often makes use of digital or analog sensory data as a way to "capture" human senses and sensory experiences. Yet, designing for and with sensory data is complex because of our unique, embodied ways of making sense of our somatosensory experiences. Sensory data does not have one prescribed meaning for everyone. We propose a one-day Studio at TEI to start a dialogue about work with sensory data and its representation of human sensory experience. Specifically, we propose a guided walk and a series of sensory explorations in Bordeaux to contemplate the interplay between first-person somatosensory experiences and streams of site-specific data from various sensors. By walking and noticing together, this Studio invites participants to engage in a process of creative reflection on their felt experiences, their connection to their surroundings, and their stance within or outside the design community.

@inproceedings{Bell_TEI25_DataDialogues, title = {{Sensory Data Dialogues: A Somaesthetic Exploration of Bordeaux through Five Senses}}, author = {Bell, Fiona and Cochrane, Karen Anne and Haynes, Alice C and Reed, Courtney N. and Teixeira Riggs, Alexandra and Koelle, Marion and Turmo Vidal, Laia and Rallabandi, L. Vineetha}, year = {2025}, month = feb, booktitle = {{Proceedings of the Nineteenth International Conference on Tangible, Embedded, and Embodied Interaction}}, location = {Bordeaux / Talence, France}, publisher = {Association for Computing Machinery}, address = {New York, NY, USA}, series = {TEI '25}, doi = {10.1145/3689050.3708327}, url = {https://doi.org/10.1145/3689050.3708327}, }

2024


Shifting Ambiguity, Collapsing Indeterminacy: Designing with Data as Baradian Apparatus. Courtney N. Reed, Adan L. Benito, Franco Caspe, and 1 more author. ACM Transactions on Computer-Human Interaction, Dec 2024

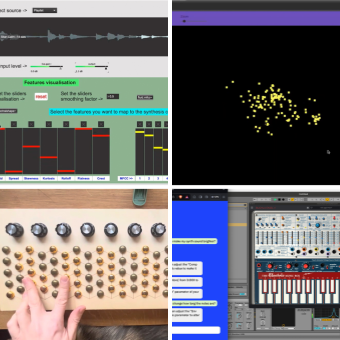

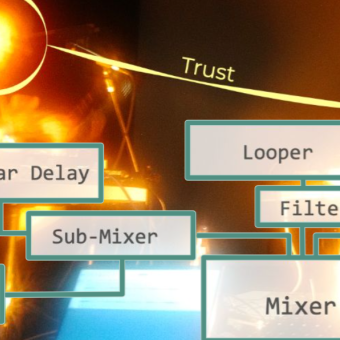

This article examines how digital systems designers distil the messiness and ambiguity of the world into concrete data that can be processed by computing systems. Using Karen Barad’s agential realism as a guide, we explore how data is fundamentally entangled with the tools and theories of its measurement. We examine data-enabled artefacts acting as Baradian apparatuses: they do not exist independently of the phenomenon they seek to measure but rather collect and co-produce observations from within their entangled state, such that the phenomenon and the apparatus co-constitute one another. Connecting Barad’s quantum view of indeterminacy to the prevailing HCI discourse on the opportunities and challenges of ambiguity, we suggest that the very act of trying to stabilise a conceptual interpretation of data within an artefact has the paradoxical effect of amplifying and shifting ambiguity in interaction. We illustrate these ideas through three case studies from our own practices of designing digital musical instruments (DMIs). DMIs necessarily encode symbolic and music-theoretical knowledge as part of their internal operation, even though conceptual knowledge is not their intended outcome. In each case, we explore the nature of the apparatus, what phenomena it co-produces, and where the ambiguity lies, in order to suggest approaches for design using these abstract theoretical frameworks.

@article{Reed_TOCHI_ShiftingAmbiguity, author = {Reed, Courtney N. and Benito, Adan L. and Caspe, Franco and McPherson, Andrew P.}, title = {{Shifting Ambiguity, Collapsing Indeterminacy: Designing with Data as Baradian Apparatus}}, year = {2024}, publisher = {Association for Computing Machinery}, address = {New York, NY, USA}, volume = {31}, number = {6}, issn = {1073-0516}, url = {https://doi.org/10.1145/3689043}, doi = {10.1145/3689043}, journal = {ACM Transactions on Computer-Human Interaction}, month = dec, articleno = {73}, numpages = {41}, }

Enculturation and Value Encoding in the Design of Vocal DMIs. Courtney N. Reed. In Proceedings of the CHIME Annual Conference 2024, Dec 2024

This paper considers enculturation in vocal DMI design. Two points of inquiry propose further examination of how values and assumptions from HCI and musical practices are encoded in the design of DMIs.

@inproceedings{Reed_CHIME24_VocalEnculturation, title = {{Enculturation and Value Encoding in the Design of Vocal DMIs}}, author = {Reed, Courtney N.}, year = {2024}, month = dec, booktitle = {{Proceedings of the CHIME Annual Conference 2024}}, address = {The Open University, Milton Keynes, United Kingdom}, series = {CHIME '24}, numpages = {1}, url = {https://static1.squarespace.com/static/6227c31a43daf21135453605/t/6738d984449bce18fd86195d/1731778948801/11+Courtney+N.+Reed.pdf}, }

Ethnographic Exploration of Timbre in Hackathon Designs. Charalampos Saitis, Bleiz Macsen Del Sette, Jordan Shier, and 5 more authors. In Proceedings of the CHIME Annual Conference 2024, Dec 2024

This paper reports a summary account of the Timbre Tools Hackathon: a hackathon that invited audio developers and music technologists to consider and work with timbre through the design of tools that promote a timbre-first approach to digital instrument craft practice (timbre tools). Through ethnographic observation, we identified different approaches towards integrating timbre as an active part of creating tools and technologies in music. These strategies inform future work and the development of tools to assist awareness and exploration of timbre for instrument makers.

@inproceedings{Saitis_CHIME24_TimbreEthno, title = {{Ethnographic Exploration of Timbre in Hackathon Designs}}, author = {Saitis, Charalampos and Del Sette, Bleiz Macsen and Shier, Jordan and Tian, Haokun and Zheng, Shuoyang and Skach, Sophie and Reed, Courtney N. and Ford, Corey}, year = {2024}, month = dec, booktitle = {{Proceedings of the CHIME Annual Conference 2024}}, address = {The Open University, Milton Keynes, United Kingdom}, series = {CHIME '24}, numpages = {1}, url = {https://static1.squarespace.com/static/6227c31a43daf21135453605/t/6734b6d5b882961f8ec04316/1731507925478/6+Charalampos+Saitis+et+al.pdf}, }

Timbre Tools: Ethnographic Perspectives on Timbre and Sonic Cultures in Hackathon Designs. Charalampos Saitis, Bleiz Macsen Del Sette, Jordan Shier, and 5 more authors. In Proceedings of the 19th International Audio Mostly Conference: Explorations in Sonic Cultures, Sep 2024

Timbre is a nuanced yet abstractly defined concept. Its inherently subjective qualities make it challenging to design and work with. In this paper, we explore the conceptualisation and negotiation of timbre within the creative practice of timbre tool makers. To this end, we hosted a hackathon event and performed an ethnographic study to explore how participants engaged with the notion of timbre and how their conception of timbre was shaped through social interactions and technological encounters. We present individual descriptions of each team’s design process and reflect on our data to identify commonalities in the ways that timbre is understood and informed by sound technologies and their surrounding sonic cultures, e.g., by relating concepts of timbre to metaphors. We extend current understanding by offering novel interdisciplinary and multimodal insights into timbre.

@inproceedings{Saitis_AM24_TimbreTools, author = {Saitis, Charalampos and Del Sette, Bleiz Macsen and Shier, Jordan and Tian, Haokun and Zheng, Shuoyang and Skach, Sophie and Reed, Courtney N. and Ford, Corey}, title = {{Timbre Tools: Ethnographic Perspectives on Timbre and Sonic Cultures in Hackathon Designs}}, year = {2024}, month = sep, isbn = {9798400709685}, publisher = {Association for Computing Machinery}, address = {New York, NY, USA}, url = {https://doi.org/10.1145/3678299.3678322}, doi = {10.1145/3678299.3678322}, booktitle = {{Proceedings of the 19th International Audio Mostly Conference: Explorations in Sonic Cultures}}, pages = {229–244}, numpages = {16}, location = {Milan, Italy}, series = {AM '24}, }

A framework for modeling performers’ beat-to-beat heart intervals using music features and Interpretation Maps. Mateusz Soliński, Courtney N. Reed, and Elaine Chew. Frontiers in Psychology, Sep 2024

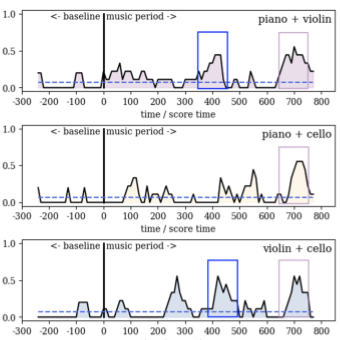

Objective: Music strongly modulates our autonomic nervous system. This modulation is evident in musicians’ beat-to-beat heart (RR) intervals, a marker of heart rate variability (HRV), and can be related to music features and structures. We present a novel approach to modeling musicians’ RR interval variations, analyzing detailed components within a music piece to extract continuous music features and annotations of musicians’ performance decisions. Methods: A professional ensemble (violinist, cellist, and pianist) performs Schubert’s Trio No. 2, Op. 100, Andante con moto nine times during rehearsals. RR interval series are collected from each musician using wireless ECG sensors. Linear mixed models are used to predict their RR intervals based on music features (tempo, loudness, note density), interpretive choices (Interpretation Map), and a starting factor. Results: The models explain approximately half of the variability of the RR interval series for all musicians, with R-squared = 0.606 (violinist), 0.494 (cellist), and 0.540 (pianist). The features with the strongest predictive values were loudness, climax, moment of concern, and starting factor. Conclusions: The method revealed the relative effects of different music features on autonomic response. For the first time, we show a strong link between an interpretation map and RR interval changes. Modeling autonomic response to music stimuli is important for developing medical and non-medical interventions. Our models can serve as a framework for estimating performers’ physiological reactions using only music information that could also apply to listeners.

@article{Solinski_FPSYG_RRints, title = {{A framework for modeling performers’ beat-to-beat heart intervals using music features and Interpretation Maps}}, volume = {15}, number = {1403599}, issn = {1664-1078}, url = {http://dx.doi.org/10.3389/fpsyg.2024.1403599}, doi = {10.3389/fpsyg.2024.1403599}, journal = {Frontiers in Psychology}, publisher = {Frontiers Media SA}, author = {Soliński, Mateusz and Reed, Courtney N. and Chew, Elaine}, year = {2024}, month = sep, }

Body Lutherie: Co-Designing a Wearable for Vocal Performance with a Changing Body. Rachel Freire and Courtney N. Reed. In Proceedings of the International Conference on New Interfaces for Musical Expression, Sep 2024

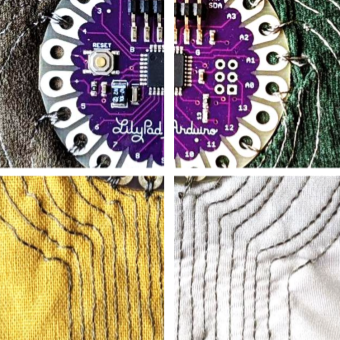

Research at NIME has incorporated embodied perspectives from design and HCI communities to explore how instruments and performers shape each other in interaction. Material perspectives also reveal the influence of other more-than-human factors on musical interaction. We propose an additional, currently unaddressed perspective in instrument design: the influence of the body not only as the locus of experience, but as a physical, entangled aspect of more-than-human musicking. Proposing a practice of “Body Lutherie”, we explore how digital instrument designers can honour and work with living, dynamic bodies. Our design of a breath-based vocal wearable instrument incorporated uncontrollable aspects of a vocalist’s body and its physical change over different timescales. We distinguish the body in the design process and acknowledge its agency in vocal instrument design. Reflection on our co-design process between vocal pedagogy and eTextile fashion perspectives demonstrates how Body Lutherie can generate empathy and understanding of the body as a collaborator in future instrument design and artistic practice.

@inproceedings{Freire_NIME24_BodyLutherie, title = {{Body Lutherie: Co-Designing a Wearable for Vocal Performance with a Changing Body}}, author = {Freire, Rachel and Reed, Courtney N.}, year = {2024}, month = sep, booktitle = {{Proceedings of the International Conference on New Interfaces for Musical Expression}}, address = {Utrecht, The Netherlands}, numpages = {10}, pages = {117--126}, issn = {2220-4806}, url = {http://nime.org/proceedings/2024/nime2024_18.pdf}, doi = {10.5281/zenodo.13904800}, editor = {Bin, S M Astrid and Reed, Courtney N.}, }

Explainable AI and Music. Nick Bryan-Kinns, Berker Banar, Corey Ford, and 3 more authors. In Artificial Intelligence for Art Creation and Understanding, Jul 2024

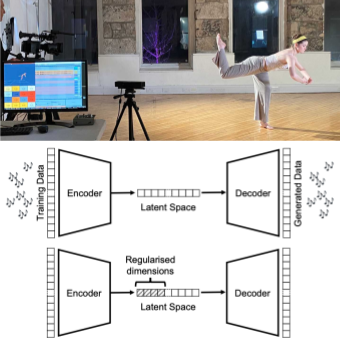

The field of eXplainable Artificial Intelligence (XAI) has become a hot topic, examining how machine learning models such as neural nets and deep learning techniques can be made more understandable to humans. However, there is very little research on XAI for the arts. This chapter explores what XAI might mean for AI and art creation by exploring the potential of XAI for music generation. One hundred AI and music papers are reviewed to illustrate how AI models are being explained, or more often not explained, and to suggest some ways in which we might design XAI systems to better help humans understand what an AI model is doing when it generates music. The chapter then demonstrates how a latent space model for music generation can be made more explainable by extending the MeasureVAE architecture to include explainable attributes in combination with offering real-time music generation. The chapter concludes with four key challenges for XAI for music and the arts more generally: i) the nature of explanation; ii) the effect of AI models, features, and training sets on explanation; iii) user-centred design of XAI; iv) interaction design of explainable interfaces.

@incollection{BryanKinns_CRCPress_XAI, title = {{Explainable AI and Music}}, author = {Bryan-Kinns, Nick and Banar, Berker and Ford, Corey and Reed, Courtney N. and Zhang, Yixiao and Armitage, Jack}, year = {2024}, month = jul, booktitle = {{Artificial Intelligence for Art Creation and Understanding}}, publisher = {CRC Press}, pages = {1–29}, doi = {10.1201/9781003406273-1}, isbn = {9781003406273}, url = {http://dx.doi.org/10.1201/9781003406273-1}, }

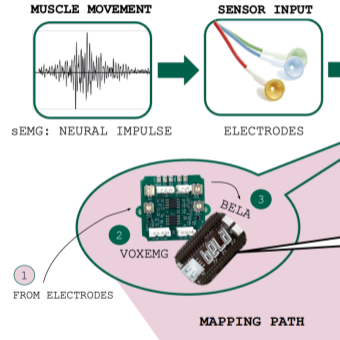

Sonic Entanglements with Electromyography: Between Bodies, Signals, and Representations. Courtney N. Reed, Landon Morrison, Andrew P. McPherson, and 2 more authors. In Proceedings of the 2024 ACM Designing Interactive Systems Conference, Jul 2024

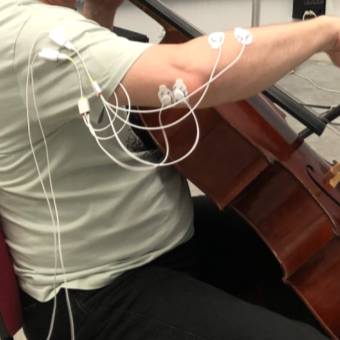

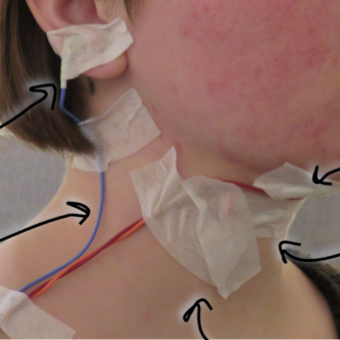

This paper investigates sound and music interactions arising from the use of electromyography (EMG) to instrumentalise signals from muscle exertion of the human body. We situate EMG within a family of embodied interaction modalities, where it occupies a middle ground, considered as a “signal from the inside” compared with external observations of the body (e.g., motion capture), but also seen as more volitional than neurological states recorded by electroencephalography (EEG). To understand the messiness of gestural interaction afforded by EMG, we revisit the phenomenological turn in HCI, reading Paul Dourish’s work on the transparency of “ready-to-hand” technologies against the grain of recent posthumanist theories, which offer a performative interpretation of musical entanglements between bodies, signals, and representations. We take music performance as a use case, reporting on the opportunities and constraints posed by EMG in workshop-based studies of vocal, instrumental, and electronic practices. We observe that, across our diverse range of musical subjects, participants consistently challenged notions of EMG as a transparent tool that directly registered the state of the body, reporting instead that it took on “present-at-hand” qualities, defamiliarising the performer’s own sense of themselves and reconfiguring their embodied practice.

@inproceedings{Reed_DIS24_SonicEntanglements, title = {{Sonic Entanglements with Electromyography: Between Bodies, Signals, and Representations}}, author = {Reed, Courtney N. and Morrison, Landon and McPherson, Andrew P. and Fierro, David and Tanaka, Atau}, year = {2024}, month = jul, booktitle = {{Proceedings of the 2024 ACM Designing Interactive Systems Conference}}, location = {Copenhagen, Denmark}, publisher = {Association for Computing Machinery}, address = {New York, NY, USA}, series = {DIS '24}, pages = {2691–2707}, numpages = {17}, doi = {10.1145/3643834.3661572}, isbn = {9798400705830}, url = {https://doi.org/10.1145/3643834.3661572}, }

Design and Experience Tactile Symbols using Continuous and Motion-Coupled Vibration. Nihar Sabnis, Dennis Wittchen, Gabriela Vega, and 2 more authors. In Hands-on Demonstrations at the Eurohaptics Conference, Jun 2024

One application area of vibrotactile haptics has been to create abstract tactile symbols, such as notifications, which require the user’s interpretation. Another area is the rendering of realistic material interactions, such as friction, which provide embodied experiences to users. The abstract symbols are rendered using continuous vibration, whereas vibration coupled to user motion is used to elicit embodied experiences. In our research, we explore how embodied experiences can be used for symbolic mediation, i.e., how can we use these two vibration types to design hybrid tactile symbols? In this demo, we invite visitors to explore a set of such hybrid tactile symbols created by experts in a user study [1]. We further invite visitors to design tactile symbols themselves using a graphical user interface and experience them on a set of tangible user interfaces. We expect the demo to take 3–5 minutes.

@inproceedings{Sabnis_EH24_MotionVibration, title = {{Design and Experience Tactile Symbols using Continuous and Motion-Coupled Vibration}}, author = {Sabnis, Nihar and Wittchen, Dennis and Vega, Gabriela and Reed, Courtney N. and Strohmeier, Paul}, year = {2024}, month = jun, booktitle = {{Hands-on Demonstrations at the Eurohaptics Conference}}, location = {Lille, France}, }

Auditory imagery ability influences accuracy when singing with altered auditory feedback. Courtney N. Reed, Marcus Pearce, and Andrew McPherson. Musicae Scientiae, Feb 2024

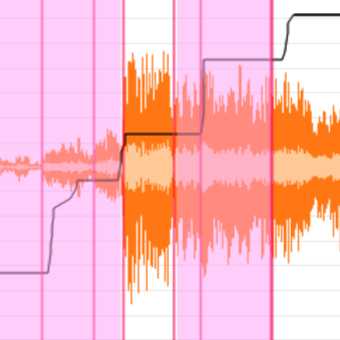

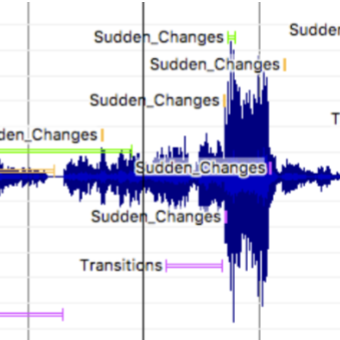

In this preliminary study, we explored the relationship between auditory imagery ability and the maintenance of tonal and temporal accuracy when singing and audiating with altered auditory feedback (AAF). Actively performing participants sang and audiated (sang mentally but not aloud) a self-selected piece in AAF conditions, including upward pitch-shifts and delayed auditory feedback (DAF), and with speech distraction. Participants with higher self-reported scores on the Bucknell Auditory Imagery Scale (BAIS) produced a tonal reference that was less disrupted by pitch shifts and speech distraction than musicians with lower scores. However, there was no observed effect of BAIS score on temporal deviation when singing with DAF. Auditory imagery ability was not related to the experience of having studied music theory formally, but was significantly related to the experience of performing. The significant effect of auditory imagery ability on tonal reference deviation remained even after partialling out the effect of experience of performing. The results indicate that auditory imagery ability plays a key role in maintaining an internal tonal center during singing but has at most a weak effect on temporal consistency. In this article, we outline future directions in understanding the multifaceted role of auditory imagery ability in singers’ accuracy and expression.

@article{Reed_MSX_AAFVocalAccuracy, title = {{Auditory imagery ability influences accuracy when singing with altered auditory feedback}}, author = {Reed, Courtney N. and Pearce, Marcus and McPherson, Andrew}, year = {2024}, month = feb, journal = {Musicae Scientiae}, publisher = {SAGE Publications}, volume = {28}, number = {3}, pages = {478–501}, doi = {10.1177/10298649231223077}, issn = {2045-4147}, url = {http://dx.doi.org/10.1177/10298649231223077}, }

Base and Stitch: Evaluating eTextile Interfaces from a Material-Centric View. L. Vineetha Rallabandi, Alice C. Haynes, Courtney N. Reed, and 1 more author. In Proceedings of the Eighteenth International Conference on Tangible, Embedded, and Embodied Interaction, Feb 2024

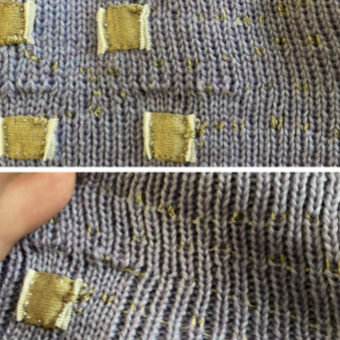

Fabrics are seen as the foundation for e-textile interfaces but contribute their own tactile properties to interaction. We examine the role of fabrics in gestural interaction from a novel, textile-focused view. We replicated an eTextile sensor and interface for rolling and pinching gestures on four different fabric swatches and invited six participants, including both designers and lay users, to interact with them. Using a semi-structured interview, we examined their interaction with the materials and how they perceived movement and feedback from the textile sensor and a visual GUI. We analyzed participants’ responses using a joint, reflexive thematic analysis and propose two key considerations for research in e-textile design: 1) both sensor and fabric contribute their own, inseparable materiality, and 2) wearable sensing must be evaluated with respect to culturally situated bodies and orientation. Expanding on material-oriented design research, we proffer that the evaluation of eTextiles must also be material-led: it cannot be decontextualized and must be grounded within a soma-aware and situated context.

@inproceedings{Rallabandi_TEI24_BaseStitch, title = {{Base and Stitch: Evaluating eTextile Interfaces from a Material-Centric View}}, author = {Rallabandi, L. Vineetha and Haynes, Alice C. and Reed, Courtney N. and Strohmeier, Paul}, year = {2024}, month = feb, booktitle = {{Proceedings of the Eighteenth International Conference on Tangible, Embedded, and Embodied Interaction}}, location = {Cork, Ireland}, publisher = {Association for Computing Machinery}, address = {New York, NY, USA}, series = {TEI '24}, articleno = {15}, numpages = {13}, doi = {10.1145/3623509.3633363}, isbn = {9798400704024}, url = {https://doi.org/10.1145/3623509.3633363}, }

Liminal Space: A Performance with RaveNET. Rachel Freire, Valentin Martinez-Missir, Courtney N. Reed, and 1 more author. In Proceedings of the Eighteenth International Conference on Tangible, Embedded, and Embodied Interaction, Feb 2024

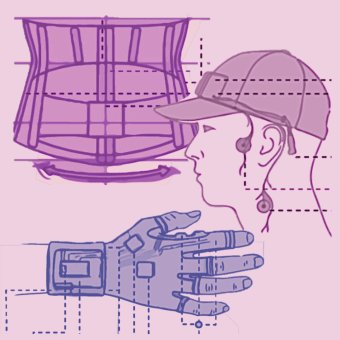

We present our musical performance exploration of liminal spaces, which focuses on the interconnected physicality of bodies in music, using biosignals and gestural, movement-based interaction to shape live performances in novel ways. Physical movement is important in structuring performance, providing cues across musical ensembles, and non-verbally informing other musicians of intention. This is especially true for improvised work. Our performance involves the use of our musicking bodies to modulate audio signals. Three bespoke wearable nodes modulate the performance through control voltages (CV) and interface with specific technical aspects of our instruments and techniques: 1) an “anti-corset” that measures the expansion and resistance of Reed’s abdomen while singing, 2) an augmented glove that assists Strohmeier’s bass/guitar signal routing across his pedal board and modular setup, and 3) a cap-like device that captures Martinez-Missir’s subtle facial expressions as he manipulates his modular synthesizer and drum machine setup. Through these performances we explore the notion of control in improvised musical performance, as well as the interconnectedness of and communication within our ensemble as we learn to collaborate and interpret each other’s bodies in this novel interaction.

@inproceedings{Freire_TEI24_RaveNETPerf, title = {{Liminal Space: A Performance with RaveNET}}, author = {Freire, Rachel and Martinez-Missir, Valentin and Reed, Courtney N. and Strohmeier, Paul}, year = {2024}, month = feb, booktitle = {{Proceedings of the Eighteenth International Conference on Tangible, Embedded, and Embodied Interaction}}, location = {Cork, Ireland}, publisher = {Association for Computing Machinery}, address = {New York, NY, USA}, series = {TEI '24}, articleno = {105}, numpages = {4}, doi = {10.1145/3623509.3635337}, isbn = {9798400704024}, url = {https://doi.org/10.1145/3623509.3635337}, }

RaveNET: Connecting People and Exploring Liminal Space through Wearable Networks in Music PerformanceRachel Freire, Valentin Martinez-Missir, Courtney N. Reed, and 1 more authorIn Proceedings of the Eighteenth International Conference on Tangible, Embedded, and Embodied Interaction, Feb 2024

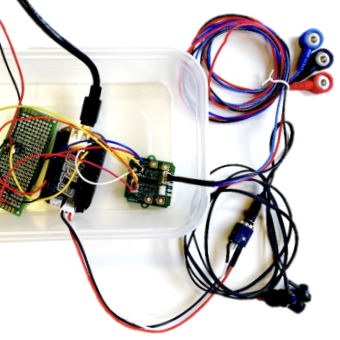

RaveNET: Connecting People and Exploring Liminal Space through Wearable Networks in Music PerformanceRachel Freire, Valentin Martinez-Missir, Courtney N. Reed, and 1 more authorIn Proceedings of the Eighteenth International Conference on Tangible, Embedded, and Embodied Interaction, Feb 2024RaveNET connects people to music, enabling musicians to modulate sound using signals produced by their own bodies or the bodies of others. We present three wearable prototype nodes in an inaugural RaveNET performance: Bones, an anti-corset, uses capacitive sensing to detect stretch as the singer breathes. Tendons, a half-glove, measures galvanic skin response, pulse, and movement of the bass player’s hands. Veins, a cap with electrodes for surface electromyography, captures the facial expressions of the drum machine operator. These signals are filtered, normalized, and amplified to control voltage levels to modulate sound. Together, musicians and nodes form RaveNET and engage with shared liminal experiences. In designing these wearables and evaluating them in performance, we reflect on our creative processes, spaces between our different bodies, our presence and control within the network, and how this made us adapt our movements in order to be noticed and heard.
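As a rough illustration of the "filtered, normalized, and amplified to control voltage levels" chain, the following Python sketch rests on assumptions throughout. The one-pole low-pass filter, the min-max normalization over a whole buffer, the 0 to 5 V output range, and the function name `to_control_voltage` are all hypothetical choices and do not come from the RaveNET nodes' firmware. A live system would more likely calibrate min/max in advance than compute them over a buffer.

```python
from typing import List


def smooth(samples: List[float], alpha: float = 0.2) -> List[float]:
    """One-pole low-pass filter (exponential moving average); alpha is an assumed smoothing factor."""
    if not samples:
        return []
    out: List[float] = []
    y = samples[0]
    for x in samples:
        y = alpha * x + (1.0 - alpha) * y
        out.append(y)
    return out


def normalize(values: List[float]) -> List[float]:
    """Min-max normalize to [0, 1]; an empty buffer stays empty, a flat one maps to zeros."""
    if not values:
        return []
    lo, hi = min(values), max(values)
    span = hi - lo
    if span == 0:
        return [0.0] * len(values)
    return [(v - lo) / span for v in values]


def to_control_voltage(samples: List[float], cv_max: float = 5.0, alpha: float = 0.2) -> List[float]:
    """Hypothetical chain: filter, normalize, then scale to 0..cv_max volts (range is an assumption)."""
    return [cv_max * v for v in normalize(smooth(samples, alpha))]


if __name__ == "__main__":
    # Made-up stretch-sensor readings standing in for one breathing cycle.
    raw = [0.10, 0.12, 0.40, 0.85, 0.90, 0.30, 0.15]
    print([round(v, 2) for v in to_control_voltage(raw)])
```

Separating the three stages makes it easy to substitute a different filter or output range per node, for example a bipolar range for a modular synthesizer input.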

@inproceedings{Freire_TEI24_RaveNETWiP, title = {{RaveNET: Connecting People and Exploring Liminal Space through Wearable Networks in Music Performance}}, author = {Freire, Rachel and Martinez-Missir, Valentin and Reed, Courtney N. and Strohmeier, Paul}, year = {2024}, month = feb, booktitle = {{Proceedings of the Eighteenth International Conference on Tangible, Embedded, and Embodied Interaction}}, location = {Cork, Ireland}, publisher = {Association for Computing Machinery}, address = {New York, NY, USA}, series = {TEI '24}, articleno = {89}, numpages = {8}, doi = {10.1145/3623509.3635270}, isbn = {9798400704024}, url = {https://doi.org/10.1145/3623509.3635270}, }

2023

-

A Guide to Evaluating the Experience of Media and Arts Technology
Nick Bryan-Kinns and Courtney N. Reed
In Creating Digitally. Intelligent Systems Reference Library, Dec 2023

Evaluation is essential to understanding the value that digital creativity brings to people’s experience, for example in terms of their enjoyment, creativity, and engagement. There is a substantial body of research on how to design and evaluate interactive arts and digital creativity applications. There is also extensive Human-Computer Interaction (HCI) literature on how to evaluate user interfaces and user experiences. However, it can be difficult for artists, practitioners, and researchers to navigate such a broad and disparate collection of materials when considering how to evaluate technology they create that is at the intersection of art and interaction. This chapter provides a guide to designing robust user studies of creative applications at the intersection of art, technology and interaction, which we refer to as Media and Arts Technology (MAT). We break MAT studies down into two main kinds: proof-of-concept and comparative studies. As MAT studies are exploratory in nature, their evaluation requires the collection and analysis of both qualitative data such as free text questionnaire responses, interviews, and observations, and also quantitative data such as questionnaires, number of interactions, and length of time spent interacting. This chapter draws on over 20 years of experience of designing and evaluating novel interactive systems to provide a concrete template on how to structure a study to evaluate MATs that is both rigorous and repeatable, and how to report study results that are publishable and accessible to a wide readership in art and science communities alike.

@incollection{BryanKinns_CreatingDigitally, title = {{A Guide to Evaluating the Experience of Media and Arts Technology}}, author = {Bryan-Kinns, Nick and Reed, Courtney N.}, year = {2023}, month = dec, booktitle = {{Creating Digitally. Intelligent Systems Reference Library}}, publisher = {Springer International Publishing}, pages = {267–300}, doi = {10.1007/978-3-031-31360-8_10}, isbn = {9783031313608}, issn = {1868-4408}, url = {http://dx.doi.org/10.1007/978-3-031-31360-8_10}, volume = {241}, } -

Do You Hear What I Hear?
Simin Yang, Mathieu Barthet, Courtney N. Reed, and 1 more author
Computer, Dec 2023

This installment of Computer’s series highlighting the work published in IEEE Computer Society journals comes from IEEE Transactions on Affective Computing. Musical performance is often described as expressing emotion. However, the human perception of emotion in music is not well understood. The studies by Yang et al. examine listeners’ emotional perception over time to a performance of a single musical piece experienced in live concert conditions, and in the lab, through video recordings. The authors aimed to find out the following: What level of agreement exists between listeners of the same performance? How are perceived emotions related to the semantic features of the music (expressible in linguistic terms) and to machine-extractable music features? What aspects of the music itself and of the listener, like music expertise, influence perceived emotions?

@article{Yang_Computer_Hear, title = {{Do You Hear What I Hear?}}, author = {Yang, Simin and Barthet, Mathieu and Reed, Courtney N. and Chew, Elaine}, year = {2023}, month = dec, journal = {Computer}, publisher = {Institute of Electrical and Electronics Engineers (IEEE)}, volume = {56}, number = {12}, pages = {4–6}, doi = {10.1109/mc.2023.3315470}, issn = {1558-0814}, url = {http://dx.doi.org/10.1109/MC.2023.3315470}, } -

Triangle Simplex Plots for Representing and Classifying Heart Rate VariabilityMateusz Soliński, Courtney N. Reed, and Elaine ChewIn Proceedings of the 2023 Computing in Cardiology Conference (CinC), Oct 2023

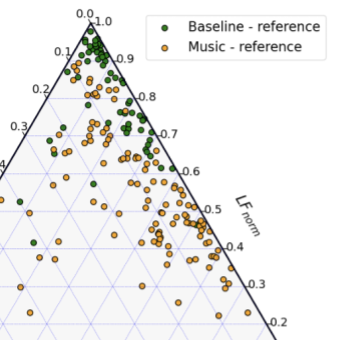

Triangle Simplex Plots for Representing and Classifying Heart Rate VariabilityMateusz Soliński, Courtney N. Reed, and Elaine ChewIn Proceedings of the 2023 Computing in Cardiology Conference (CinC), Oct 2023Simplex plots afford barycentric mapping and visualisation of the ratio of three variables, summed to a constant, as positions in an equilateral triangle (2-simplex); for instance, time distribution in three-interval musical rhythms. We propose a novel use of simplex plots to visualise the balance of autonomic variables and classification of autonomic states during baseline and music performance. RR interval series extracted from electrocardiographic (ECG) traces were collected from a musical trio (pianist, violinist, cellist) in a baseline (5 min) and music performance (\sim10 min) condition. Schubert’s Trio Op. 100, \textitAndante con moto was performed in nine rehearsal sessions over five days. Each RR interval series’ very low (VLF), low (LF), and high (HF) frequency component power values, calculated in 30 sec windows (hop size 15 sec), were normalised to 1 and visualised in triangle simplex plots. Spectral clustering was used to cluster data points for baseline and music conditions. We correlated the accuracy between the clustered and true values. Strong negative correlation was observed for the violinist (r = –0.80, p ≤.01, accuracy range: [0.64, 0.94]) and pianist (r = –0.62, p = .073, [0.64, 0.80]), suggesting adaptation of their cardiac response (reduction between baseline and performance) over the performances; a weakly negative, non-significant correlation was observed for the cellist (r = –0.23, p = .545, [0.50, 0.61]), indicating similarity between baseline and performance over time. Using simplex plots, we were able to effectively represent VLF, LF and HF ratios and track changes in autonomic response over a series of music rehearsals to observe autonomic states and changes over time.
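This Python sketch illustrates the barycentric mapping described above. Each window's VLF, LF, and HF powers are divided by their sum, and the resulting weights (p_VLF, p_LF, p_HF) place the point at p_VLF·A + p_LF·B + p_HF·C, where A, B, and C are the corners of an equilateral triangle. The vertex assignment (VLF bottom-left, LF bottom-right, HF apex) and the name `simplex_xy` are assumptions made for illustration. This is not the authors' plotting code.

```python
import math
from typing import Tuple

# Assumed vertex placement for a unit equilateral triangle (2-simplex).
VLF_VERTEX = (0.0, 0.0)
LF_VERTEX = (1.0, 0.0)
HF_VERTEX = (0.5, math.sqrt(3.0) / 2.0)


def simplex_xy(vlf: float, lf: float, hf: float) -> Tuple[float, float]:
    """Map three non-negative band powers to a point in the triangle via barycentric weights."""
    if min(vlf, lf, hf) < 0:
        raise ValueError("band powers must be non-negative")
    total = vlf + lf + hf
    if total <= 0:
        raise ValueError("band powers must sum to a positive value")
    weights = (vlf / total, lf / total, hf / total)  # ratios that sum to 1
    vertices = (VLF_VERTEX, LF_VERTEX, HF_VERTEX)
    x = sum(w * vx for w, (vx, _) in zip(weights, vertices))
    y = sum(w * vy for w, (_, vy) in zip(weights, vertices))
    return x, y


if __name__ == "__main__":
    # Illustrative (made-up) band powers for one 30 s window.
    print(simplex_xy(vlf=1200.0, lf=800.0, hf=400.0))
```

A window dominated by one band lands near that band's corner, and equal powers land at the centroid. Successive windows therefore trace a path that spectral clustering can partition.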

@inproceedings{Solinski_CinC23_TriangleSimplex, title = {{Triangle Simplex Plots for Representing and Classifying Heart Rate Variability}}, author = {Soliński, Mateusz and Reed, Courtney N. and Chew, Elaine}, year = {2023}, month = oct, booktitle = {{Proceedings of the 2023 Computing in Cardiology Conference (CinC)}}, location = {Atlanta, GA, USA}, } -

Time Delay Stability Analysis of Pairwise Interactions Amongst Ensemble-Listener RR Intervals and Expressive Music Features
Mateusz Soliński, Courtney N. Reed, and Elaine Chew
In Proceedings of the 2023 Computing in Cardiology Conference (CinC), Oct 2023

Time Delay Stability (TDS) can reveal physiological function and states in networked organs. Here, we introduce a novel application of TDS to a musical setting to study interactions between RR intervals of ensemble musicians and a listener, and music properties. Three musicians performed a movement from Schubert’s Trio Op. 100 nine times in the company of one listener. Their RR intervals were collected during baseline (5 min, silence) and performances (~10 min each). Loudness and tempo were extracted from recorded music audio. Regions of stable optimal time delay were identified during baseline and music, shuffled data, and data pairs from incongruent recordings. Bootstrapping was employed to obtain mean TDS probabilities (calculated based on all performances). A significant difference in mean TDS probability between music and baseline is observed for all musician pairs (p<.001) and for cello-listener (p=.025), with mean TDS probability greater during music. A significant decrease in mean TDS probability was observed for piano-violin (p<.001), violin-tempo (p=.045), and cello-tempo (p<.001) for incongruent pairs. The highest inter-musician TDS probabilities were observed in musically tense sections: the final climax before the music dies down toward the ending, and a suspenseful swell mid-piece. This framework offers a promising way to track dynamic RR interval interactions between people engaged in a shared activity, and, in this musical activity, between the people and music properties.
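In network physiology, TDS is commonly computed by sliding a window along two signals and finding, in each window, the lag that maximises their cross-correlation. The TDS value is then the fraction of windows in which that lag stays stable. The following pure-Python sketch follows that idea with simplified, assumed parameters: window length, hop, maximum lag, and a stability rule of "lags vary by at most `tol` samples across `run` consecutive windows". The function names are hypothetical, and this is not the authors' pipeline. A real analysis would first resample RR intervals onto a uniform time grid, and would add the shuffled-data and bootstrapping steps mentioned above.

```python
import math
import random
from typing import List, Sequence


def pearson(a: Sequence[float], b: Sequence[float]) -> float:
    """Pearson correlation of two equal-length sequences (0.0 if either is constant)."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    if va == 0 or vb == 0:
        return 0.0
    return cov / math.sqrt(va * vb)


def best_lag(a: Sequence[float], b: Sequence[float], max_lag: int) -> int:
    """Lag in samples that maximises |corr(a[t], b[t + lag])| within +/- max_lag."""
    best, best_r = 0, -1.0
    for lag in range(-max_lag, max_lag + 1):
        if lag >= 0:
            x, y = a[: len(a) - lag], b[lag:]
        else:
            x, y = a[-lag:], b[: len(b) + lag]
        r = abs(pearson(x, y))
        if r > best_r:
            best, best_r = lag, r
    return best


def tds_probability(a: Sequence[float], b: Sequence[float], win: int, hop: int,
                    max_lag: int, tol: int = 1, run: int = 4) -> float:
    """Fraction of windows inside some run of `run` consecutive windows whose lags vary by <= tol."""
    lags: List[int] = [
        best_lag(a[s : s + win], b[s : s + win], max_lag)
        for s in range(0, len(a) - win + 1, hop)
    ]
    stable = [False] * len(lags)
    for i in range(len(lags) - run + 1):
        segment = lags[i : i + run]
        if max(segment) - min(segment) <= tol:
            for j in range(i, i + run):
                stable[j] = True
    return sum(stable) / len(lags) if lags else 0.0


if __name__ == "__main__":
    rng = random.Random(0)
    a = [rng.gauss(0.0, 1.0) for _ in range(200)]
    b = [0.0] * 3 + a[:-3]  # b follows a with a constant 3-sample delay
    print(best_lag(a[:40], b[:40], max_lag=5))
    print(tds_probability(a, b, win=40, hop=20, max_lag=5))
```

The example uses a synthetic pair with a constant delay, so every window yields the same lag and the TDS value is 1. Unrelated signals would tend to produce erratic lags and lower values. NumPy would be faster for long recordings.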

@inproceedings{Solinski_CinC23_TRDanalysis, title = {{Time Delay Stability Analysis of Pairwise Interactions Amongst Ensemble-Listener RR Intervals and Expressive Music Features}}, author = {Soliński, Mateusz and Reed, Courtney N. and Chew, Elaine}, year = {2023}, month = oct, booktitle = {{Proceedings of 2023 Computing in Cardiology Conference (CinC)}}, location = {Atlanta, GA, USA}, } -

Agential Instruments Design Workshop
Jack Armitage, Victor Shepardson, Nicola Privato, and 10 more authors
In Proceedings of the International Conference on AI and Music Creativity, Aug 2023

Physical and gestural musical instruments that take advantage of artificial intelligence and machine learning to explore instrumental agency are becoming more accessible due to the development of new tools and workflows specialised for mobility, portability, efficiency and low latency. This full-day, hands-on workshop will provide all of these tools to participants along with support from their creators, enabling rapid creative exploration of their applications in musical instrument design.

@inproceedings{Armitage_AIMC23_AgentialInstruments, title = {{Agential Instruments Design Workshop}}, author = {Armitage, Jack and Shepardson, Victor and Privato, Nicola and Pelinski, Teresa and Temprano, Adan L. Benito and Wolstanholme, Lewis and Martelloni, Andrea and Caspe, Franco Santiago and Reed, Courtney N. and Skach, Sophie and Diaz, Rodrigo and O'Brien, Sean Patrick and Shier, Jordie}, year = {2023}, month = aug, booktitle = {{Proceedings of the International Conference on AI and Music Creativity}}, location = {Brighton, UK}, } -

Querying Experience with Musical Interaction
Courtney N. Reed, Eevee Zayas-Garin, and Andrew McPherson
In Proceedings of the International Conference on New Interfaces for Musical Expression, May 2023

With this workshop, we aim to bring together researchers with the common interest of querying, articulating and understanding experience in the context of New Interfaces for Musical Expression, and to jointly identify challenges, methodologies and opportunities in this space. Furthermore, we hope it serves as a platform for strengthening the community of researchers working with qualitative and phenomenological methods around the design of digital musical instruments (DMIs) and HCI applied to musical interaction.

@inproceedings{Reed_NIME23_QueryingExperience, title = {{Querying Experience with Musical Interaction}}, author = {Reed, Courtney N. and Zayas-Garin, Eevee and McPherson, Andrew}, year = {2023}, month = may, booktitle = {{Proceedings of the International Conference on New Interfaces for Musical Expression}}, location = {Mexico City, Mexico}, } -

Negotiating Experience and Communicating Information Through Abstract MetaphorCourtney N. Reed, Paul Strohmeier, and Andrew P. McPhersonIn Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems, Apr 2023

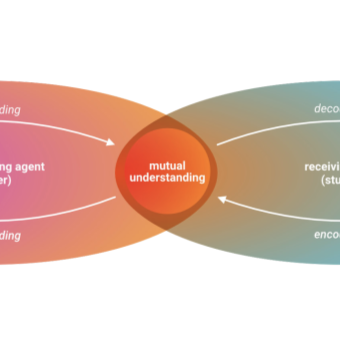

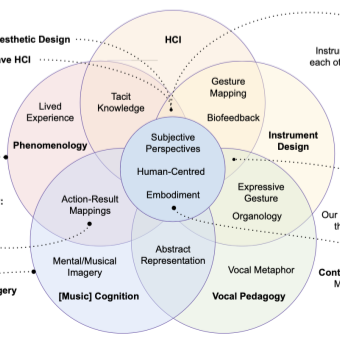

Negotiating Experience and Communicating Information Through Abstract MetaphorCourtney N. Reed, Paul Strohmeier, and Andrew P. McPhersonIn Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems, Apr 2023An implicit assumption in metaphor use is that it requires grounding in a familiar concept, prominently seen in the popular Desktop Metaphor. In human-to-human communication, however, abstract metaphors, without such grounding, are often used with great success. To understand when and why metaphors work, we present a case study of metaphor use in voice teaching. Voice educators must teach about subjective, sensory experiences and rely on abstract metaphor to express information about unseen and intangible processes inside the body. We present a thematic analysis of metaphor use by 12 voice teachers. We found that metaphor works not because of strong grounding in the familiar, but because of its ambiguity and flexibility, allowing shared understanding between individual lived experiences. We summarise our findings in a model of metaphor-based communication. This model can be used as an analysis tool within the existing taxonomies of metaphor in user interaction for better understanding why metaphor works in HCI. It can also be used as a design resource for thinking about metaphor use and abstracting metaphor strategies from both novel and existing designs.

@inproceedings{Reed_CHI23_VocalMetaphor, title = {{Negotiating Experience and Communicating Information Through Abstract Metaphor}}, author = {Reed, Courtney N. and Strohmeier, Paul and McPherson, Andrew P.}, year = {2023}, month = apr, booktitle = {{Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems}}, location = {Hamburg, Germany}, publisher = {Association for Computing Machinery}, address = {New York, NY, USA}, series = {CHI '23}, articleno = {185}, numpages = {16}, doi = {10.1145/3544548.3580700}, isbn = {9781450394215}, url = {https://doi.org/10.1145/3544548.3580700}, } -

Tactile Symbols with Continuous and Motion-Coupled Vibration: An Exploration of Using Embodied Experiences for Hermeneutic Design
Nihar Sabnis, Dennis Wittchen, Gabriela Vega, and 2 more authors
In Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems, Apr 2023

With most digital devices, vibrotactile feedback consists of rhythmic patterns of continuous vibration. In contrast, when interacting with physical objects, we experience many of their material properties through vibration which is not continuous, but dynamically coupled to our actions. We assume the first style of vibration to lead to hermeneutic mediation, while the second style leads to embodied mediation. What if both types of mediation could be used to design tactile symbols? To investigate this, five haptic experts designed tactile symbols using continuous and motion-coupled vibration. Experts were interviewed to understand their symbols and design approach. A thematic analysis revealed themes showing that lived experience and affective qualities shaped design choices, that experts optimized for passive or active symbols, and that they considered context as part of the design. Our study suggests that adding embodied experiences as a design resource changes how participants think of tactile symbol design, thus broadening the scope of the symbol by designing for context, and expanding their affective repertoire, as changing the type of vibration influences perceived valence and arousal.
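The following sketch makes the distinction between the two vibration styles concrete. A continuous pattern schedules pulse onsets on a clock. A motion-coupled pattern emits a pulse only when the user's input enters a new position bin, so input held still produces no vibration. The function names, the bin-crossing rule, and the units are illustrative assumptions, not the symbol designers' implementation.

```python
import math
from typing import List, Sequence


def continuous_onsets(duration_ms: int, on_ms: int, off_ms: int) -> List[int]:
    """Time-driven: pulse onsets (ms) repeat on a fixed rhythm, independent of movement."""
    return list(range(0, duration_ms, on_ms + off_ms))


def motion_coupled_onsets(positions: Sequence[float], bin_size: float) -> List[int]:
    """Action-driven: return sample indices where the input enters a new bin.

    At most one pulse is emitted per sample, even if the input skips several bins.
    """
    if not positions:
        return []
    onsets: List[int] = []
    last_bin = math.floor(positions[0] / bin_size)
    for i, p in enumerate(positions[1:], start=1):
        current = math.floor(p / bin_size)
        if current != last_bin:
            onsets.append(i)
            last_bin = current
    return onsets


if __name__ == "__main__":
    print(continuous_onsets(1000, on_ms=100, off_ms=150))  # [0, 250, 500, 750]
    print(motion_coupled_onsets([0.4] * 6, bin_size=1.0))  # [] (no movement, no pulses)
    print(motion_coupled_onsets([0.0, 0.5, 1.0, 1.5, 2.0, 2.5], bin_size=1.0))  # [2, 4]
```

In the first style, timing belongs to the device. In the second, it belongs to the user's movement, which corresponds to the abstract's contrast between hermeneutic and embodied mediation.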

@inproceedings{Sabnis_CHI23_TactileSymbols, title = {{Tactile Symbols with Continuous and Motion-Coupled Vibration: An Exploration of Using Embodied Experiences for Hermeneutic Design}}, author = {Sabnis, Nihar and Wittchen, Dennis and Vega, Gabriela and Reed, Courtney N. and Strohmeier, Paul}, year = {2023}, month = apr, booktitle = {{Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems}}, location = {Hamburg, Germany}, publisher = {Association for Computing Machinery}, address = {New York, NY, USA}, series = {CHI '23}, articleno = {688}, numpages = {19}, doi = {10.1145/3544548.3581356}, isbn = {9781450394215}, url = {https://doi.org/10.1145/3544548.3581356}, } -

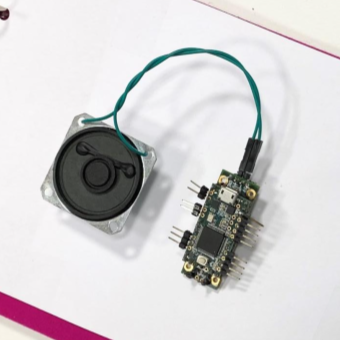

Haptic Servos: Self-Contained Vibrotactile Rendering System for Creating or Augmenting Material ExperiencesCourtney N. Reed*, Nihar Sabnis*, Dennis Wittchen*, and 3 more authorsIn Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems, Apr 2023

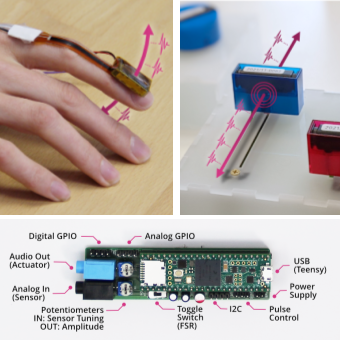

Haptic Servos: Self-Contained Vibrotactile Rendering System for Creating or Augmenting Material ExperiencesCourtney N. Reed*, Nihar Sabnis*, Dennis Wittchen*, and 3 more authorsIn Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems, Apr 2023When vibrations are synchronized with our actions, we experience them as material properties. This has been used to create virtual experiences like friction, counter-force, compliance, or torsion. Implementing such experiences is non-trivial, requiring high temporal resolution in sensing, high fidelity tactile output, and low latency. To make this style of haptic feedback more accessible to non-domain experts, we present Haptic Servos: self-contained haptic rendering devices which encapsulate all timing-critical elements. We characterize Haptic Servos’ real-time performance, showing the system latency is <5 ms. We explore the subjective experiences they can evoke, highlighting that qualitatively distinct experiences can be created based on input mapping, even if stimulation parameters and algorithm remain unchanged. A workshop demonstrated that users new to Haptic Servos require approximately ten minutes to set up a basic haptic rendering system. Haptic Servos are open source, we invite others to copy and modify our design.
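The abstract notes that input mapping alone can change the experience. This sketch illustrates that point: the grain-triggering rule and step size stay fixed, and only the mapping from sensor reading to rendering input changes. The travel-based trigger, the integer force scale (0 to 100), and the quadratic "progressive" mapping are hypothetical choices made for illustration. They are not taken from the Haptic Servos firmware.

```python
from typing import Callable, List, Sequence


def grain_onsets(readings: Sequence[float], mapping: Callable[[float], float], step: float) -> List[int]:
    """Emit a grain whenever the mapped input has travelled `step` (either direction) since the last grain."""
    if not readings:
        return []
    onsets: List[int] = []
    anchor = mapping(readings[0])
    for i, r in enumerate(readings[1:], start=1):
        value = mapping(r)
        if abs(value - anchor) >= step:
            onsets.append(i)
            anchor = value
    return onsets


def linear(force: float) -> float:
    """Identity mapping: grains are evenly spaced in force."""
    return force


def progressive(force: float) -> float:
    """Quadratic curve on a 0..100 scale: sparse grains at light force, dense at heavy force."""
    return force * force / 100


if __name__ == "__main__":
    press = list(range(101))  # a slow, even press from 0 to 100 (arbitrary units)
    print(grain_onsets(press, linear, step=10))       # evenly spaced grains
    print(grain_onsets(press, progressive, step=10))  # grains bunch up as force increases
```

With the same trigger rule and step, the linear mapping yields evenly spaced grains. The progressive mapping yields grains that crowd together as the press deepens, which a user might perceive as a different material.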

@inproceedings{Sabnis_CHI23_HapticServos, title = {{Haptic Servos: Self-Contained Vibrotactile Rendering System for Creating or Augmenting Material Experiences}}, author = {Reed*, Courtney N. and Sabnis*, Nihar and Wittchen*, Dennis and Pourjafarian, Narjes and Steimle, J\"{u}rgen and Strohmeier, Paul}, year = {2023}, month = apr, booktitle = {{Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems}}, location = {Hamburg, Germany}, publisher = {Association for Computing Machinery}, address = {New York, NY, USA}, series = {CHI '23}, articleno = {522}, numpages = {17}, doi = {10.1145/3544548.3580716}, isbn = {9781450394215}, url = {https://doi.org/10.1145/3544548.3580716}, } -

As the Luthiers Do: Designing with a Living, Growing, Changing Body-Material
Courtney N. Reed
In ACM CHI Workshop on Body X Materials, Apr 2023

Through soma-centric research, we see the different interaction roles of our bodies: they are the locus of our experience, a conduit for our expression and engagement, a sensor of feedback in the world, and a collaborator in our interaction with it. More “traditional” examinations of the body might look at control over it; for instance, in my research around vocal embodiment, I see many teachers and practitioners alike talking about how we can maintain control over the body. However, bodies are living, inconsistent, and typically weird. In reality, we do not have as much control over them as we would like or think we do. In this position paper, I will touch on my research around vocal physiology and sonified and vibrotactile feedback as I frame our role in a new light: designers as Body Luthiers, who must address the body as a material with inconsistencies, flaws, and variability, and work with it as a partner, embracing its uniqueness and changeability.

@inproceedings{Reed_CHI23_BodyLutherie, title = {{As the Luthiers Do: Designing with a Living, Growing, Changing Body-Material}}, author = {Reed, Courtney N.}, year = {2023}, month = apr, booktitle = {{ACM CHI Workshop on Body X Materials}}, location = {Hamburg, Germany}, } -

Designing Interactive Shoes for Tactile Augmented Reality
Dennis Wittchen, Valentin Martinez-Missir, Sina Mavali, and 3 more authors
In Proceedings of the Augmented Humans International Conference 2023, Mar 2023

Augmented Footwear has become an increasingly common research area. However, as this is a comparatively new direction in HCI, researchers and designers are not able to build upon common platforms. We discuss the design space of shoes for augmented tactile reality, focussing on physiological and biomechanical factors as well as technical considerations. We present an open source example implementation from this space, intended as an experimental platform for vibrotactile rendering and tactile AR and provide details on experiences that could be evoked with such a system. Anecdotally, the new prototype provided experiences of material properties like compliance, as well as altered perception of their movements and agency. We intend our work to lower the barrier of entry for new researchers and to support the field of tactile rendering in footwear in general by making it easier to compare results between studies.

@inproceedings{Wittchen_AHs23_AugmentedShoes, title = {{Designing Interactive Shoes for Tactile Augmented Reality}}, author = {Wittchen, Dennis and Martinez-Missir, Valentin and Mavali, Sina and Sabnis, Nihar and Reed, Courtney N. and Strohmeier, Paul}, year = {2023}, month = mar, booktitle = {{Proceedings of the Augmented Humans International Conference 2023}}, location = {Glasgow, United Kingdom}, publisher = {Association for Computing Machinery}, address = {New York, NY, USA}, series = {AHs '23}, pages = {1–14}, numpages = {14}, doi = {10.1145/3582700.3582728}, isbn = {9781450399845}, url = {https://doi.org/10.1145/3582700.3582728}, } -

The Body as Sound: Unpacking Vocal Embodiment through Auditory BiofeedbackCourtney N. Reed, and Andrew P. McPhersonIn Proceedings of the Seventeenth International Conference on Tangible, Embedded, and Embodied Interaction, Feb 2023

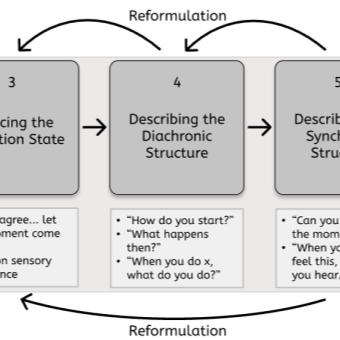

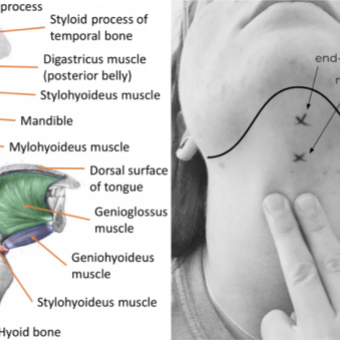

The Body as Sound: Unpacking Vocal Embodiment through Auditory BiofeedbackCourtney N. Reed, and Andrew P. McPhersonIn Proceedings of the Seventeenth International Conference on Tangible, Embedded, and Embodied Interaction, Feb 2023Multi-sensory experiences underpin embodiment, whether with the body itself or technological extensions of it. Vocalists experience intensely personal embodiment, as vocalisation has few outwardly visible effects and kinaesthetic sensations occur largely within the body, rather than through external touch. We explored this embodiment using a probe which sonified laryngeal muscular movements and provided novel auditory feedback to two vocalists over a month-long period. Somatic and micro-phenomenological approaches revealed that the vocalists understand their physiology through its sound, rather than awareness of the muscular actions themselves. The feedback shaped the vocalists’ perceptions of their practice and revealed a desire for reassurance about exploration of one’s body when the body-as-sound understanding was disrupted. Vocalists experienced uncertainty and doubt without affirmation of perceived correctness. This research also suggests that technology is viewed as infallible and highlights expectations that exist about its ability to dictate success, even when we desire or intend to explore.

@inproceedings{Reed_TEI23_BodyAsSound, title = {{The Body as Sound: Unpacking Vocal Embodiment through Auditory Biofeedback}}, author = {Reed, Courtney N. and McPherson, Andrew P.}, year = {2023}, month = feb, booktitle = {{Proceedings of the Seventeenth International Conference on Tangible, Embedded, and Embodied Interaction}}, location = {Warsaw, Poland}, publisher = {Association for Computing Machinery}, address = {New York, NY, USA}, series = {TEI '23}, articleno = {7}, numpages = {15}, doi = {10.1145/3569009.3572738}, isbn = {9781450399777}, url = {https://doi.org/10.1145/3569009.3572738}, } -

Being Meaningful: Weaving Soma-Reflective Technological Mediations into the Fabric of Daily LifeAlice Haynes, Courtney N. Reed, Charlotte Nordmoen, and 1 more authorIn Proceedings of the Seventeenth International Conference on Tangible, Embedded, and Embodied Interaction, Feb 2023

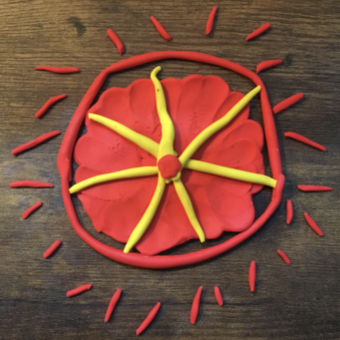

Being Meaningful: Weaving Soma-Reflective Technological Mediations into the Fabric of Daily LifeAlice Haynes, Courtney N. Reed, Charlotte Nordmoen, and 1 more authorIn Proceedings of the Seventeenth International Conference on Tangible, Embedded, and Embodied Interaction, Feb 2023A one-size-fits-all design mentality, rooted in objective efficiency, is ubiquitous in our mass-production society. This can negate peoples’ experiences, bodies, and narratives. Ongoing HCI research proposes design for meaningful relations; but for many researchers, the practical implementation of these philosophies remains somewhat intangible. In this Studio, we playfully tackle this space by engaging with the nuances of soft, flexible, and organic materials, collectively designing probes to embrace plurality, embody meaning, and encourage soma-reflection. Focusing on materiality and practices from e-textiles, soft robotics, and biomaterials research, we address technology’s role as a mediator of our experiences and determiner of our realities. The processes and probes developed in this Studio will serve as an experiential manifesto, providing practitioners with tools to deepen their own practices for designing soma-reflective tangible and embodied interaction. The Studio will form the first steps for ongoing collaboration, focusing on bespoke design and curation of meaningful, personal relationships.

@inproceedings{Haynes_TEI23_BeingMeaningful, title = {{Being Meaningful: Weaving Soma-Reflective Technological Mediations into the Fabric of Daily Life}}, author = {Haynes, Alice and Reed, Courtney N. and Nordmoen, Charlotte and Skach, Sophie}, year = {2023}, month = feb, booktitle = {{Proceedings of the Seventeenth International Conference on Tangible, Embedded, and Embodied Interaction}}, location = {Warsaw, Poland}, publisher = {Association for Computing Machinery}, address = {New York, NY, USA}, series = {TEI '23}, articleno = {68}, numpages = {5}, doi = {10.1145/3569009.3571844}, isbn = {9781450399777}, url = {https://doi.org/10.1145/3569009.3571844}, } -

Imagining & Sensing: Understanding and Extending the Vocalist-Voice Relationship Through Biosignal Feedback
Courtney N. Reed
PhD Computer Science, Queen Mary University of London, Feb 2023

The voice is body and instrument. Third-person interpretation of the voice by listeners, vocal teachers, and digital agents is centred largely around audio feedback. For a vocalist, physical feedback from within the body provides an additional interaction. The vocalist’s understanding of their multi-sensory experiences is through tacit knowledge of the body. This knowledge is difficult to articulate, yet awareness and control of the body are innate. In the ever-increasing emergence of technology which quantifies or interprets physiological processes, we must remain conscious also of embodiment and human perception of these processes. Focusing on the vocalist-voice relationship, this thesis expands knowledge of human interaction and how technology influences our perception of our bodies. To unite these different perspectives in the vocal context, I draw on mixed methods from cognitive science, psychology, music information retrieval, and interactive system design. Objective methods such as vocal audio analysis provide a third-person observation. Subjective practices such as micro-phenomenology capture the experiential, first-person perspectives of the vocalists themselves. This quantitative-qualitative blend provides details not only on novel interaction, but also an understanding of how technology influences existing understanding of the body.

@phdthesis{Reed_PhD_ImaginingSensing, title = {{Imagining & Sensing: Understanding and Extending the Vocalist-Voice Relationship Through Biosignal Feedback}}, author = {Reed, Courtney N.}, year = {2023}, month = feb, school = {PhD Computer Science, Queen Mary University of London}, }

2022

-

Exploring Experiences with New Musical Instruments through Micro-phenomenology
Courtney N. Reed, Charlotte Nordmoen, Andrea Martelloni, and 6 more authors
In Proceedings of the International Conference on New Interfaces for Musical Expression, Jun 2022

This paper introduces micro-phenomenology, a research discipline for exploring and uncovering the structures of lived experience, as a beneficial methodology for studying and evaluating interactions with digital musical instruments. Compared to other subjective methods, micro-phenomenology evokes and returns one to the moment of experience, allowing access to dimensions and observations which may not be recalled in reflection alone. We present a case study of five micro-phenomenological interviews conducted with musicians about their experiences with existing digital musical instruments. The interviews reveal deep, clear descriptions of different modalities of synchronic moments in interaction, especially in tactile connections and bodily sensations. We highlight the elements of interaction captured in these interviews which would not have been revealed otherwise and the importance of these elements in researching perception, understanding, interaction, and performance with digital musical instruments.

@inproceedings{Reed_NIME22_Microphenomenology, title = {{Exploring Experiences with New Musical Instruments through Micro-phenomenology}}, author = {Reed, Courtney N. and Nordmoen, Charlotte and Martelloni, Andrea and Lepri, Giacomo and Robson, Nicole and Zayas-Garin, Eevee and Cotton, Kelsey and Mice, Lia and McPherson, Andrew}, year = {2022}, month = jun, booktitle = {{Proceedings of the International Conference on New Interfaces for Musical Expression}}, address = {The University of Auckland, New Zealand}, articleno = {49}, doi = {10.21428/92fbeb44.b304e4b1}, issn = {2220-4806}, url = {https://doi.org/10.21428%2F92fbeb44.b304e4b1}, presentation-video = {https://youtu.be/-Ket6l90S8I}, }

Communicating Across Bodies in the Voice Lesson
Courtney N. Reed
In ACM CHI Workshop on Tangible Interaction for Well-Being, Apr 2022

In this position paper, I introduce my research on vocalists and their relationships with their bodies, and on how haptic feedback can improve these connections and the way we communicate sensory experience. I use the voice lesson and vocal performance as an environment to understand more broadly how people perceive very refined movements that they feel internally. My research seeks to understand how we communicate these sensory experiences in human-to-human interaction and how we can augment or communicate sensory experience through technology. I examine perception of these experiences through different feedback modalities, namely auditory and haptic feedback. Providing new ways to communicate our sensory experiences can improve understanding between two individuals (for instance, teacher and student). This is especially important in virtual singing lessons, where most voice study is taking place in early 2022, as many of the common ways of interacting with the voice have disappeared with the move to online interaction.

@inproceedings{Reed_CHI22_CommunicatingBodies, title = {{Communicating Across Bodies in the Voice Lesson}}, author = {Reed, Courtney N.}, year = {2022}, month = apr, booktitle = {{ACM CHI Workshop on Tangible Interaction for Well-Being}}, location = {New Orleans, LA, USA}, }

Sensory Sketching for Singers
Courtney N. Reed
In ACM CHI Workshop on Sketching Across the Senses, Apr 2022

This position paper outlines my study of vocalists and their relationships with the voice as both instrument and part of the body. I study this embodiment from a phenomenological perspective, employing somaesthetics and micro-phenomenology to explore the tacit relationships that singers have with their bodies. While verbal metaphor is traditionally used to articulate experience in voice teaching, I also use body mapping and material speculation to help articulate tactile and auditory experiences while singing.

@inproceedings{Reed_CHI22_SensorySketching, title = {{Sensory Sketching for Singers}}, author = {Reed, Courtney N.}, year = {2022}, month = apr, booktitle = {{ACM CHI Workshop on Sketching Across the Senses}}, location = {New Orleans, LA, USA}, }

Singing Knit: Soft Knit Biosensing for Augmenting Vocal Performances
Courtney N. Reed, Sophie Skach, Paul Strohmeier, and 1 more author
In Proceedings of the Augmented Humans International Conference 2022, Mar 2022

This paper discusses the design of the Singing Knit, a wearable knit collar for measuring a singer’s vocal interactions through surface electromyography. We improve the ease and comfort of multi-electrode biosensing systems by adapting knit e-textile methods. The goal of the design was to preserve the capabilities of rigid electrode sensing while addressing its shortcomings, focusing on comfort and reliability during extended wear, practicality and convenience for performance settings, and aesthetic value. We use conductive, silver-plated nylon jersey fabric electrodes in a full rib knit accessory for sensing laryngeal muscular activation. We discuss the iterative design and the material decision-making process as a method for building integrated soft-sensing wearable systems for similar settings. Additionally, we discuss how the design choices made throughout construction reflect the collar's use in a musical performance context.

@inproceedings{Reed_AHs22_SingingKnit, title = {{Singing Knit: Soft Knit Biosensing for Augmenting Vocal Performances}}, author = {Reed, Courtney N. and Skach, Sophie and Strohmeier, Paul and McPherson, Andrew P.}, year = {2022}, month = mar, booktitle = {{Proceedings of the Augmented Humans International Conference 2022}}, location = {Kashiwa, Chiba, Japan}, publisher = {Association for Computing Machinery}, address = {New York, NY, USA}, series = {AHs '22}, pages = {170--183}, numpages = {14}, doi = {10.1145/3519391.3519412}, isbn = {9781450396325}, url = {https://doi.org/10.1145/3519391.3519412}, }
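The abstract does not describe the signal processing behind the collar. As a rough illustration only, raw sEMG is commonly reduced to an activation envelope before it is used; the sketch below assumes a single electrode channel, a known sampling rate, and a hypothetical 50 ms moving-RMS window. It omits the band-pass filtering that sEMG pipelines usually apply first, and the name `emg_envelope` is illustrative, not part of the Singing Knit system.

```python
import numpy as np


def emg_envelope(signal, fs, window_ms=50.0):
    """Moving-RMS envelope of one raw sEMG channel (illustrative sketch).

    signal    -- 1-D sequence of raw sEMG samples
    fs        -- sampling rate in Hz
    window_ms -- RMS window length in ms (hypothetical default)
    """
    x = np.asarray(signal, dtype=float)
    x = x - x.mean()  # remove any DC offset from the electrode/amplifier
    n = max(1, int(round(fs * window_ms / 1000.0)))
    kernel = np.ones(n) / n
    # Squaring rectifies the signal; averaging over the window and taking the
    # square root gives a moving RMS, one common activation measure.
    return np.sqrt(np.convolve(x ** 2, kernel, mode="same"))


# Example: a 100 Hz test tone of amplitude 2 has an RMS of sqrt(2).
fs = 2000
t = np.arange(fs) / fs
env = emg_envelope(2.0 * np.sin(2 * np.pi * 100 * t), fs)
```

A moving RMS is chosen here because it is simple and smooth; a longer window gives a steadier envelope at the cost of slower response to changes in muscle activity.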

Vibro-Touch
Courtney N. Reed and Nihar Sabnis
In ACM TEI Studio “How Tangible is TEI?” Exploring Swatches as a New Academic Publication Format, Feb 2022

In our research, we examine tactile representations used for user interaction and system notifications. This swatch works as an interface to store and play back vibrotactile stimuli, allowing easy, cost-effective (~€20) reproduction and exploration of tactile feedback. Typically, feedback designed and used in research is described in written form; this swatch provides a companion that lets others physically experience the vibrations. The tactile sensation is stored on a microcontroller and played back through a speaker that works as an actuator. The swatch could be given to others to test and experience different sensations in a simplified, modular format. For instance, rather than redesigning feedback for each new study, researchers could share and reproduce the tactile feedback. The code on the microcontroller can be changed or updated to hold multiple "swatches" in one. In other applications, the swatch could also play audio feedback.

@inproceedings{Reed_TEI22_Vibrotouch, title = {{Vibro-Touch}}, author = {Reed, Courtney N. and Sabnis, Nihar}, year = {2022}, month = feb, booktitle = {{ACM TEI Studio ``How Tangible is TEI?'' Exploring Swatches as a New Academic Publication Format}}, location = {Daejeon, Republic of Korea}, }
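The swatch stores a tactile stimulus on a microcontroller and plays it through a speaker used as an actuator. A minimal sketch of how such a stimulus could be pre-computed as a sample table, assuming a hypothetical 250 Hz sine burst (near the frequency range to which skin is commonly reported to be most sensitive), an 8 kHz playback rate, and an 8-bit output stage. None of these values come from the swatch itself, and `vibro_burst` is an illustrative name.

```python
import numpy as np


def vibro_burst(freq_hz=250.0, duration_s=0.2, fs=8000, fade_s=0.02):
    """Return an 8-bit unsigned sample table for a vibrotactile sine burst.

    All defaults are hypothetical illustration values.
    """
    n = int(round(duration_s * fs))
    t = np.arange(n) / fs
    wave = np.sin(2 * np.pi * freq_hz * t)
    # Raised-cosine fade in and out so the burst edges do not click
    n_fade = int(round(fade_s * fs))
    env = np.ones(n)
    if n_fade > 0:
        ramp = 0.5 - 0.5 * np.cos(np.pi * np.arange(n_fade) / n_fade)
        env[:n_fade] = ramp
        env[-n_fade:] = ramp[::-1]
    # Map [-1, 1] to [0, 255], e.g. for an 8-bit DAC or PWM output;
    # 128 corresponds to silence (zero displacement)
    return np.round((wave * env + 1.0) * 127.5).astype(np.uint8)


table = vibro_burst()
```

A table like this could be copied into microcontroller memory and stepped through at the playback rate; storing several tables would be one way to hold multiple "swatches" in one device.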

Examining Embodied Sensation and Perception in Singing
Courtney N. Reed
In Proceedings of the Sixteenth International Conference on Tangible, Embedded, and Embodied Interaction, Feb 2022

This paper introduces my PhD research on the relationship that vocalists have with their voice. The voice, both instrument and body, provides a unique perspective from which to examine embodied practice. Interaction with the voice largely takes place without a physical interface, and the sensation of singing is difficult to describe; however, voice pedagogy has successfully used metaphor to communicate sensory experience between student and teacher. I examine the voice from several perspectives, including experiential, physiological, and communicative interactions, and explore how we convey sensations in voice pedagogy and how perception of the body is shaped through the experience of living in it. Further, by externalising internal movement using sonified surface electromyography, I aim to give presence to aspects of vocal movement that have become subconscious or automatic. The findings of this PhD will provide an understanding of how we perceive the experience of living within the body and of performing by using the body as an instrument.

@inproceedings{Reed_TEI22_EmbodiedSingingDC, title = {{Examining Embodied Sensation and Perception in Singing}}, author = {Reed, Courtney N.}, year = {2022}, month = feb, booktitle = {{Proceedings of the Sixteenth International Conference on Tangible, Embedded, and Embodied Interaction}}, location = {Daejeon, Republic of Korea}, publisher = {Association for Computing Machinery}, address = {New York, NY, USA}, series = {TEI '22}, articleno = {47}, numpages = {7}, doi = {10.1145/3490149.3503581}, isbn = {9781450391474}, url = {https://doi.org/10.1145/3490149.3503581}, }
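One way to picture sonified surface electromyography is a parameter mapping in which muscle activation drives a tone. The sketch below is a hypothetical mapping, not the one used in this research: it normalises an activation envelope to the range 0 to 1 and uses it to control both the pitch (between assumed 200 Hz and 800 Hz bounds) and the loudness of a sine tone. The function name and all numeric defaults are illustrative assumptions.

```python
import numpy as np


def sonify_envelope(env, fs_env, fs_audio=16000, f_min=200.0, f_max=800.0):
    """Map a control envelope (e.g. sEMG activation) to a sine tone whose
    pitch and loudness follow the envelope. Hypothetical mapping."""
    env = np.asarray(env, dtype=float)
    span = env.max() - env.min()
    # Normalise to [0, 1]; a flat envelope maps to silence
    norm = (env - env.min()) / span if span > 0 else np.zeros_like(env)
    # Resample the control signal to audio rate by linear interpolation
    n_audio = int(round(len(env) * fs_audio / fs_env))
    t_env = np.arange(len(env)) / fs_env
    t_audio = np.arange(n_audio) / fs_audio
    ctrl = np.interp(t_audio, t_env, norm)
    freq = f_min + (f_max - f_min) * ctrl
    # Integrate instantaneous frequency into phase so the tone stays
    # continuous (no clicks) as the pitch changes
    phase = 2 * np.pi * np.cumsum(freq) / fs_audio
    return ctrl * np.sin(phase)


# Example: a one-second rising envelope sampled at 100 Hz
y = sonify_envelope(np.linspace(0.0, 1.0, 100), fs_env=100)
```

Accumulating phase, rather than computing `sin(2 * pi * freq * t)` directly, is the usual choice when frequency varies over time, since the direct form produces phase jumps.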

2021


Exploring XAI for the Arts: Explaining Latent Space in Generative Music
Nick Bryan-Kinns, Berker Banar, Corey Ford, and 4 more authors
In 1st Workshop on eXplainable AI Approaches for Debugging and Diagnosis (XAI4Debugging@NeurIPS2021), Dec 2021

Explainable AI has the potential to support more interactive and fluid co-creative AI systems that can creatively collaborate with people. To do this, creative AI models need to be amenable to debugging by offering eXplainable AI (XAI) features that are inspectable, understandable, and modifiable. However, there is currently very little XAI for the arts. In this work, we demonstrate how a latent variable model for music generation can be made more explainable; specifically, we extend MeasureVAE, which generates measures of music. We increase the explainability of the model by: i) using latent space regularisation to force specific dimensions of the latent space to map to meaningful musical attributes; ii) providing a user interface feedback loop that allows people to adjust dimensions of the latent space and observe the results of these changes in real time; and iii) providing a visualisation of the musical attributes in the latent space to help people understand and predict the effect of changes to latent space dimensions. We suggest that, in doing so, we bridge the gap between the latent space and the generated musical outcomes in a meaningful way, making the model and its outputs more explainable and more debuggable.

@inproceedings{BryanKinns_NeurIPS_XAImusic, title = {{Exploring XAI for the Arts: Explaining Latent Space in Generative Music}}, author = {Bryan-Kinns, Nick and Banar, Berker and Ford, Corey and Reed, Courtney N. and Zhang, Yixiao and Colton, Simon and Armitage, Jack}, year = {2021}, month = dec, booktitle = {{1st Workshop on eXplainable AI Approaches for Debugging and Diagnosis (XAI4Debugging@NeurIPS2021)}}, address = {Online}, url = {https://arxiv.org/abs/2308.05496}, }
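The abstract names latent space regularisation without giving its form. A common formulation, used for example in attribute-regularised VAEs (AR-VAE), penalises pairs of samples whose ordering along one latent dimension disagrees with their ordering by a musical attribute. The sketch below computes that loss for one batch with NumPy; whether this exact loss was used in the work above is an assumption, and the function name, `delta`, and the example values are hypothetical.

```python
import numpy as np


def attribute_regularisation_loss(z_dim, attr, delta=10.0):
    """AR-VAE-style loss tying one latent dimension to a musical attribute.

    z_dim -- shape (batch,), values of the chosen latent dimension
    attr  -- shape (batch,), attribute values (e.g. a note-density score)
    delta -- hypothetical scaling factor controlling latent spread
    """
    z_dim = np.asarray(z_dim, dtype=float)
    attr = np.asarray(attr, dtype=float)
    # Pairwise differences within the batch: D[i, j] = x[i] - x[j]
    d_latent = z_dim[:, None] - z_dim[None, :]
    d_attr = attr[:, None] - attr[None, :]
    # tanh(delta * D_latent) approaches sign(D_attr) when the latent dimension
    # orders each pair the same way as the attribute, with enough separation;
    # the mean absolute gap is the penalty
    return float(np.mean(np.abs(np.tanh(delta * d_latent) - np.sign(d_attr))))


aligned = attribute_regularisation_loss([0.0, 1.0, 2.0], [0.1, 0.5, 0.9])
reversed_order = attribute_regularisation_loss([2.0, 1.0, 0.0], [0.1, 0.5, 0.9])
```

In training, a term like this would be added to the usual VAE reconstruction and KL losses; once it is small, moving along that dimension in a user interface should change the attribute monotonically, which is what makes the slider interpretable.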

The Role of Auditory Imagery and Altered Auditory Feedback in Singers’ Timing Accuracy
Courtney N. Reed, Andrew P. McPherson, and Marcus T. Pearce
In Proceedings of the Joint 16th International Conference on Music Perception and Cognition (ICMPC) and 11th Triennial Conference of the European Society for the Cognitive Science of Music (ESCOM), Jul 2021

Auditory imagery allows musicians to recall mental representations of sound and has been linked to better sensorimotor coordination, effective gestural communication with other performers, and the ability to perform with timing accuracy even when auditory feedback is disrupted. However, the predominance of auditory imagery in the multimodal relationships that drive internal temporal models remains unclear. This study explores how singers adapt to altered auditory feedback (AAF) using auditory imagery. We examine whether auditory imagery ability, measured using the Bucknell Auditory Imagery Scale, affects singers’ ability to maintain temporal accuracy when singing and audiating with AAF, and explore the significance of auditory imagery for timing and its role in multimodal imagery. Additionally, we focus on how imagery benefits musicians specifically, comparing timing error in a group of skilled performers.

@inproceedings{Reed_ICMPC21_AAF, title = {{The Role of Auditory Imagery and Altered Auditory Feedback in Singers' Timing Accuracy}}, author = {Reed, Courtney N. and McPherson, Andrew P. and Pearce, Marcus T.}, year = {2021}, month = jul, booktitle = {{Proceedings of the Joint 16th International Conference on Music Perception and Cognition (ICMPC) and 11th Triennial Conference of the European Society for the Cognitive Science of Music (ESCOM)}}, location = {Sheffield, UK}, }
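The study compares timing error under altered auditory feedback, but the abstract does not define the measure. A minimal sketch, assuming timing error is the asynchrony between each sung onset and the nearest target beat, reported as mean signed error (positive means late) and mean absolute error in milliseconds. The function name and the example onset and beat times are hypothetical.

```python
import numpy as np


def timing_error(onsets_s, targets_s):
    """Return (mean signed, mean absolute) asynchrony in milliseconds.

    Each produced onset is paired with the nearest target time.
    Hypothetical measure for illustration; needs at least two targets.
    """
    onsets = np.asarray(onsets_s, dtype=float)
    targets = np.sort(np.asarray(targets_s, dtype=float))
    if targets.size < 2:
        raise ValueError("need at least two target times")
    # Index of the first target >= each onset, kept in a valid range
    idx = np.clip(np.searchsorted(targets, onsets), 1, targets.size - 1)
    left, right = targets[idx - 1], targets[idx]
    nearest = np.where(onsets - left <= right - onsets, left, right)
    asyn_ms = (onsets - nearest) * 1000.0  # positive = late, negative = early
    return float(asyn_ms.mean()), float(np.abs(asyn_ms).mean())


# Example: beats every 0.5 s, onsets 20 ms late, 10 ms early, 30 ms late, on time
signed, absolute = timing_error([0.02, 0.49, 1.03, 1.5], [0.0, 0.5, 1.0, 1.5])
```

Reporting both values separates a consistent drift (signed error) from overall imprecision (absolute error), since early and late onsets cancel in the signed mean.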

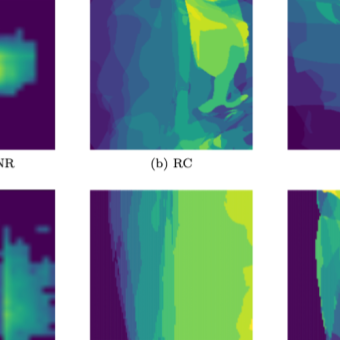

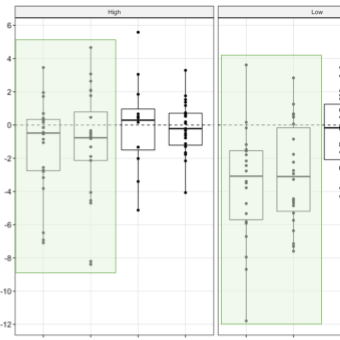

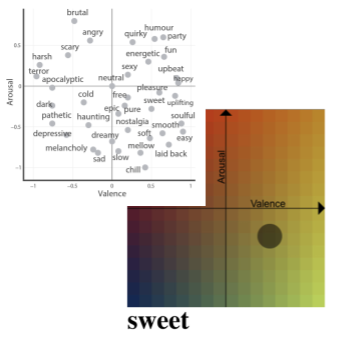

Examining Emotion Perception Agreement in Live Music Performance
Simin Yang, Courtney N. Reed, Elaine Chew, and 1 more author
IEEE Transactions on Affective Computing, Jun 2021

Current music emotion recognition (MER) systems rely on emotion data averaged across listeners and over time to infer the emotion expressed by a musical piece, often neglecting time- and listener-dependent factors. These limitations can restrict the efficacy of MER systems and cause misjudgements. We present two exploratory studies on music emotion perception. First, in a live music concert setting, fifteen audience members annotated perceived emotion in the valence-arousal space over time using a mobile application. Analyses of inter-rater reliability yielded widely varying levels of agreement in the perceived emotions. A follow-up lab-based study was then conducted to uncover the reasons for such variability: twenty-one participants annotated their perceived emotions whilst viewing and listening to a video recording of the original performance, and offered open-ended explanations. Thematic analysis revealed salient features and interpretations that help describe the cognitive processes underlying music emotion perception. Some of the results confirm known findings from music perception and MER studies. Novel findings highlight the importance of less frequently discussed musical attributes, such as musical structure, performer expression, and stage setting, as perceived across audio and visual modalities. Musicians were found to attribute emotion change to musical harmony, structure, and performance technique more than non-musicians. We suggest that accounting for such listener-informed music features can benefit MER by helping to address variability in emotion perception, providing reasons for listener similarities and idiosyncrasies.